Machine Learning with Spark

Feng Li

Central University of Finance and Economics

feng.li@cufe.edu.cn

Course home page: https://feng.li/distcomp

Machine Learning Library

MLlib is Spark’s machine learning (ML) library. Its goal is to make practical machine learning scalable and easy. At a high level, it provides tools such as:

- ML Algorithms: common learning algorithms such as classification, regression, clustering, and collaborative filtering

- Featurization: feature extraction, transformation, dimensionality reduction, and selection

- Pipelines: tools for constructing, evaluating, and tuning ML Pipelines

- Persistence: saving and loading algorithms, models, and Pipelines

- Utilities: linear algebra, statistics, data handling, etc.

MLlib APIs

The RDD-based APIs in the spark.mllib package have entered maintenance mode. The primary Machine Learning API for Spark is now the DataFrame-based API in the spark.ml package.

Why the DataFrame-based API?

DataFrames provide a more user-friendly API than RDDs. The many benefits of DataFrames include Spark Datasources, SQL/DataFrame queries, Tungsten and Catalyst optimizations, and uniform APIs across languages.

The DataFrame-based API for MLlib provides a uniform API across ML algorithms and across multiple languages.

DataFrames facilitate practical ML Pipelines, particularly feature transformations. See the Pipelines guide for details.

Start a Spark Session

import findspark  # Only needed when you run Spark within a Jupyter notebook
findspark.init()

import pyspark
from pyspark.sql import SparkSession

spark = SparkSession.builder\
    .config("spark.executor.memory", "2g")\
    .config("spark.cores.max", "2")\
    .master("spark://master:7077")\
    .appName("Python Spark").getOrCreate()  # connect to the standalone Spark master

spark

SparkSession - in-memory

Correlation

Calculating the correlation between two series of data is a common operation in statistics.

The spark.ml package provides the flexibility to calculate pairwise correlations among many series. The currently supported correlation methods are Pearson’s and Spearman’s correlation.

from pyspark.ml.linalg import Vectors

from pyspark.ml.stat import Correlation

data = [(Vectors.sparse(4, [(0, 1.0), (3, -2.0)]),),

(Vectors.dense([4.0, 5.0, 0.0, 3.0]),),

(Vectors.dense([6.0, 7.0, 0.0, 8.0]),),

(Vectors.sparse(4, [(0, 9.0), (3, 1.0)]),)]

df = spark.createDataFrame(data, ["features"])

r1 = Correlation.corr(df, "features").head()

print("Pearson correlation matrix:\n" + str(r1[0]))

r2 = Correlation.corr(df, "features", "spearman").head()

print("Spearman correlation matrix:\n" + str(r2[0]))

Pearson correlation matrix:
DenseMatrix([[1. , 0.05564149, nan, 0.40047142],
             [0.05564149, 1. , nan, 0.91359586],
             [ nan, nan, 1. , nan],
             [0.40047142, 0.91359586, nan, 1. ]])
Spearman correlation matrix:
DenseMatrix([[1. , 0.10540926, nan, 0.4 ],
             [0.10540926, 1. , nan, 0.9486833 ],
             [ nan, nan, 1. , nan],
             [0.4 , 0.9486833 , nan, 1. ]])
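To see what Correlation.corr computes, here is a plain-Python sketch that needs no Spark. It applies Pearson's and Spearman's coefficients to the same four vectors, written out densely. Spearman's coefficient is sketched as Pearson's correlation of the ranks, with tied values given their average rank. A constant column yields nan, as column 2 does above. The helper names (pearson, average_ranks, spearman) are our own and are not part of pyspark.

```python
import math

def pearson(x, y):
    """Pearson correlation; returns nan when either series is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return float("nan")
    return cov / math.sqrt(vx * vy)

def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of positions i..j, shifted to 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def spearman(x, y):
    """Spearman correlation = Pearson correlation of the (average) ranks."""
    return pearson(average_ranks(x), average_ranks(y))

# The four feature vectors from the example above, written densely.
rows = [[1.0, 0.0, 0.0, -2.0],
        [4.0, 5.0, 0.0, 3.0],
        [6.0, 7.0, 0.0, 8.0],
        [9.0, 0.0, 0.0, 1.0]]
cols = list(zip(*rows))  # cols[j] holds feature j across all rows

print(round(pearson(cols[0], cols[1]), 8))   # 0.05564149
print(round(spearman(cols[0], cols[1]), 8))  # 0.10540926
print(pearson(cols[0], cols[2]))             # nan (column 2 is constant)
```

The other off-diagonal entries of both matrices can be checked the same way, for example spearman(cols[0], cols[3]) gives 0.4.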

Summarizer

The spark.ml package provides vector column summary statistics for DataFrames through Summarizer. Available metrics are the column-wise max, min, mean, variance, and number of nonzeros, as well as the total count.

sc = spark.sparkContext  # get the SparkContext, needed to build an RDD

from pyspark.ml.stat import Summarizer

from pyspark.sql import Row

from pyspark.ml.linalg import Vectors

df = sc.parallelize([Row(weight=1.0, features=Vectors.dense(1.0, 1.0, 1.0)),

Row(weight=0.0, features=Vectors.dense(1.0, 2.0, 3.0))]).toDF()

# create summarizer for multiple metrics "mean" and "count"

summarizer = Summarizer.metrics("mean", "count")

# compute statistics for multiple metrics with weight

df.select(summarizer.summary(df.features, df.weight)).show(truncate=False)

# compute statistics for multiple metrics without weight

df.select(summarizer.summary(df.features)).show(truncate=False)

# compute statistics for single metric "mean" with weight

df.select(Summarizer.mean(df.features, df.weight)).show(truncate=False)

# compute statistics for single metric "mean" without weight

df.select(Summarizer.mean(df.features)).show(truncate=False)

+-----------------------------------+
|aggregate_metrics(features, weight)|
+-----------------------------------+
|[[1.0,1.0,1.0], 1]                 |
+-----------------------------------+

+--------------------------------+
|aggregate_metrics(features, 1.0)|
+--------------------------------+
|[[1.0,1.5,2.0], 2]              |
+--------------------------------+

+--------------+
|mean(features)|
+--------------+
|[1.0,1.0,1.0] |
+--------------+

+--------------+
|mean(features)|
+--------------+
|[1.0,1.5,2.0] |
+--------------+
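The weighted results above can be checked by hand. The plain-Python sketch below uses our own helper, not Spark's implementation. It computes a weighted column-wise mean as sum(w_i * x_i) / sum(w_i). When counting, it skips rows with weight 0.0. That rule is an assumption chosen to match the output above, where the second row has weight 0.0 and the weighted count is 1.

```python
def weighted_mean_and_count(vectors, weights=None):
    """Column-wise weighted mean and the number of rows with nonzero weight.

    Rows with weight 0.0 are dropped, which matches the weighted count of 1
    reported above (an assumption for illustration, not Spark source code).
    """
    if weights is None:
        weights = [1.0] * len(vectors)  # unweighted = every row has weight 1.0
    kept = [(v, w) for v, w in zip(vectors, weights) if w != 0.0]
    total_weight = sum(w for _, w in kept)
    dim = len(vectors[0])
    mean = [sum(w * v[j] for v, w in kept) / total_weight for j in range(dim)]
    return mean, len(kept)

# Same two rows as the Summarizer example.
features = [[1.0, 1.0, 1.0], [1.0, 2.0, 3.0]]
weights = [1.0, 0.0]

print(weighted_mean_and_count(features, weights))  # ([1.0, 1.0, 1.0], 1)
print(weighted_mean_and_count(features))           # ([1.0, 1.5, 2.0], 2)
```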

Machine Learning Pipelines

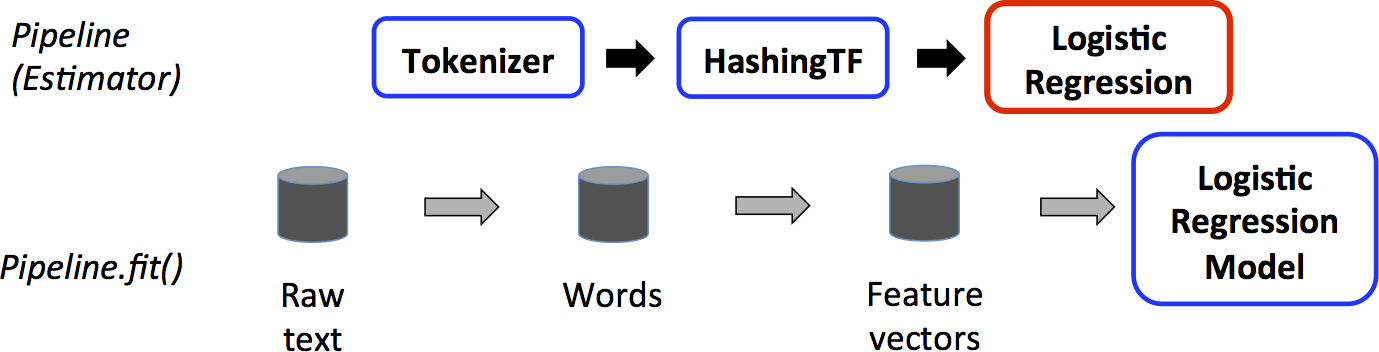

MLlib standardizes APIs for machine learning algorithms to make it easier to combine multiple algorithms into a single pipeline, or workflow.

The pipeline concept is mostly inspired by the scikit-learn project. Its main components are:

- DataFrame: This ML API uses DataFrame from Spark SQL as an ML dataset, which can hold a variety of data types. E.g., a DataFrame could have different columns storing text, feature vectors, true labels, and predictions.

- Transformer: A Transformer is an algorithm which can transform one DataFrame into another DataFrame. E.g., an ML model is a Transformer which transforms a DataFrame with features into a DataFrame with predictions.

- Estimator: An Estimator is an algorithm which can be fit on a DataFrame to produce a Transformer. E.g., a learning algorithm is an Estimator which trains on a DataFrame and produces a model.

- Pipeline: A Pipeline chains multiple Transformers and Estimators together to specify an ML workflow.

- Parameter: All Transformers and Estimators share a common API for specifying parameters.
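The toy sketch below shows how these roles fit together, in plain Python. The class names (ToyTransformer, ToyEstimator, ToyPipeline, and so on) are hypothetical and are not pyspark classes. Lists of dicts stand in for DataFrame rows. When the toy pipeline is fit, each estimator stage is fit on the current rows, and the resulting model transforms the rows passed to the next stage. This roughly mirrors how the Spark documentation describes Pipeline.fit.

```python
class ToyTransformer:
    """Maps a list of row dicts to a new list of row dicts."""
    def transform(self, rows):
        raise NotImplementedError

class ToyEstimator:
    """Learns from rows and returns a fitted ToyTransformer (a 'model')."""
    def fit(self, rows):
        raise NotImplementedError

class Lowercase(ToyTransformer):
    """A plain transformer: adds a lower-cased copy of a text column."""
    def __init__(self, input_col, output_col):
        self.input_col, self.output_col = input_col, output_col
    def transform(self, rows):
        return [{**r, self.output_col: r[self.input_col].lower()} for r in rows]

class MeanCenterModel(ToyTransformer):
    """The model produced by MeanCenter: subtracts a learned mean."""
    def __init__(self, col, mean):
        self.col, self.mean = col, mean
    def transform(self, rows):
        return [{**r, self.col + "_centered": r[self.col] - self.mean} for r in rows]

class MeanCenter(ToyEstimator):
    """An estimator: learns the column mean, returns a MeanCenterModel."""
    def __init__(self, col):
        self.col = col
    def fit(self, rows):
        mean = sum(r[self.col] for r in rows) / len(rows)
        return MeanCenterModel(self.col, mean)

class ToyPipelineModel(ToyTransformer):
    """A fitted pipeline: applies every fitted stage in order."""
    def __init__(self, stages):
        self.stages = stages
    def transform(self, rows):
        for stage in self.stages:
            rows = stage.transform(rows)
        return rows

class ToyPipeline(ToyEstimator):
    """Fits estimator stages in order, feeding transformed rows forward."""
    def __init__(self, stages):
        self.stages = stages
    def fit(self, rows):
        fitted = []
        for stage in self.stages:
            model = stage.fit(rows) if isinstance(stage, ToyEstimator) else stage
            rows = model.transform(rows)  # next stage sees the transformed rows
            fitted.append(model)
        return ToyPipelineModel(fitted)

train = [{"text": "Spark", "x": 1.0}, {"text": "MLlib", "x": 3.0}]
model = ToyPipeline([Lowercase("text", "lower"), MeanCenter("x")]).fit(train)
print(model.transform([{"text": "Pipeline", "x": 5.0}]))
# [{'text': 'Pipeline', 'x': 5.0, 'lower': 'pipeline', 'x_centered': 3.0}]
```

The fitted pipeline is itself a transformer. In the same way, pipeline.fit() in Spark returns a PipelineModel that is used through transform().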

Pipeline example: Logistic Regression

from pyspark.ml.linalg import Vectors

from pyspark.ml.classification import LogisticRegression

# Prepare training data from a list of (label, features) tuples.

training = spark.createDataFrame([

(1.0, Vectors.dense([0.0, 1.1, 0.1])),

(0.0, Vectors.dense([2.0, 1.0, -1.0])),

(0.0, Vectors.dense([2.0, 1.3, 1.0])),

(1.0, Vectors.dense([0.0, 1.2, -0.5]))], ["label", "features"])

# Prepare test data

test = spark.createDataFrame([

(1.0, Vectors.dense([-1.0, 1.5, 1.3])),

(0.0, Vectors.dense([3.0, 2.0, -0.1])),

(1.0, Vectors.dense([0.0, 2.2, -1.5]))], ["label", "features"])

# Create a LogisticRegression instance. This instance is an Estimator.

lr = LogisticRegression(maxIter=10, regParam=0.01)

# Print out the parameters, documentation, and any default values.

print("LogisticRegression parameters:\n" + lr.explainParams() + "\n")

LogisticRegression parameters:
aggregationDepth: suggested depth for treeAggregate (>= 2). (default: 2)
elasticNetParam: the ElasticNet mixing parameter, in range [0, 1]. For alpha = 0, the penalty is an L2 penalty. For alpha = 1, it is an L1 penalty. (default: 0.0)
family: The name of family which is a description of the label distribution to be used in the model. Supported options: auto, binomial, multinomial (default: auto)
featuresCol: features column name. (default: features)
fitIntercept: whether to fit an intercept term. (default: True)
labelCol: label column name. (default: label)
lowerBoundsOnCoefficients: The lower bounds on coefficients if fitting under bound constrained optimization. The bound matrix must be compatible with the shape (1, number of features) for binomial regression, or (number of classes, number of features) for multinomial regression. (undefined)
lowerBoundsOnIntercepts: The lower bounds on intercepts if fitting under bound constrained optimization. The bounds vector size must beequal with 1 for binomial regression, or the number oflasses for multinomial regression. (undefined)
maxIter: max number of iterations (>= 0). (default: 100, current: 10)
predictionCol: prediction column name. (default: prediction)
probabilityCol: Column name for predicted class conditional probabilities. Note: Not all models output well-calibrated probability estimates! These probabilities should be treated as confidences, not precise probabilities. (default: probability)
rawPredictionCol: raw prediction (a.k.a. confidence) column name. (default: rawPrediction)
regParam: regularization parameter (>= 0). (default: 0.0, current: 0.01)
standardization: whether to standardize the training features before fitting the model. (default: True)
threshold: Threshold in binary classification prediction, in range [0, 1]. If threshold and thresholds are both set, they must match.e.g. if threshold is p, then thresholds must be equal to [1-p, p]. (default: 0.5)
thresholds: Thresholds in multi-class classification to adjust the probability of predicting each class. Array must have length equal to the number of classes, with values > 0, excepting that at most one value may be 0. The class with largest value p/t is predicted, where p is the original probability of that class and t is the class's threshold. (undefined)
tol: the convergence tolerance for iterative algorithms (>= 0). (default: 1e-06)
upperBoundsOnCoefficients: The upper bounds on coefficients if fitting under bound constrained optimization. The bound matrix must be compatible with the shape (1, number of features) for binomial regression, or (number of classes, number of features) for multinomial regression. (undefined)
upperBoundsOnIntercepts: The upper bounds on intercepts if fitting under bound constrained optimization. The bound vector size must be equal with 1 for binomial regression, or the number of classes for multinomial regression. (undefined)
weightCol: weight column name. If this is not set or empty, we treat all instance weights as 1.0. (undefined)
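The regParam and elasticNetParam entries above combine into a single penalty term. As we read the Spark MLlib documentation for linear methods, writing regParam as λ and elasticNetParam as α, the regularization added to the loss for a coefficient vector w is:

```latex
R(\mathbf{w}) = \lambda \left( \alpha \lVert \mathbf{w} \rVert_1 + \frac{1 - \alpha}{2} \lVert \mathbf{w} \rVert_2^2 \right),
\qquad \lambda \ge 0,\quad \alpha \in [0, 1].
```

With α = 0 this is a pure L2 penalty, and with α = 1 a pure L1 penalty, which matches the parameter description. For lr = LogisticRegression(maxIter=10, regParam=0.01), the default α = 0.0 applies, so R(w) = 0.005 ‖w‖₂².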

# Learn a LogisticRegression model. This uses the parameters stored in lr.

model1 = lr.fit(training)

# Since model1 is a Model (i.e., a transformer produced by an Estimator),

# we can view the parameters it used during fit().

# This prints the parameter (name: value) pairs, where names are unique IDs for this

# LogisticRegression instance.

print("Model 1 was fit using parameters: ")

print(model1.extractParamMap())

Model 1 was fit using parameters:

{Param(parent='LogisticRegression_1f95b4862951', name='aggregationDepth', doc='suggested depth for treeAggregate (>= 2)'): 2,
 Param(parent='LogisticRegression_1f95b4862951', name='elasticNetParam', doc='the ElasticNet mixing parameter, in range [0, 1]. For alpha = 0, the penalty is an L2 penalty. For alpha = 1, it is an L1 penalty'): 0.0,
 Param(parent='LogisticRegression_1f95b4862951', name='family', doc='The name of family which is a description of the label distribution to be used in the model. Supported options: auto, binomial, multinomial.'): 'auto',
 Param(parent='LogisticRegression_1f95b4862951', name='featuresCol', doc='features column name'): 'features',
 Param(parent='LogisticRegression_1f95b4862951', name='fitIntercept', doc='whether to fit an intercept term'): True,
 Param(parent='LogisticRegression_1f95b4862951', name='labelCol', doc='label column name'): 'label',
 Param(parent='LogisticRegression_1f95b4862951', name='maxIter', doc='maximum number of iterations (>= 0)'): 10,
 Param(parent='LogisticRegression_1f95b4862951', name='predictionCol', doc='prediction column name'): 'prediction',
 Param(parent='LogisticRegression_1f95b4862951', name='probabilityCol', doc='Column name for predicted class conditional probabilities. Note: Not all models output well-calibrated probability estimates! These probabilities should be treated as confidences, not precise probabilities'): 'probability',
 Param(parent='LogisticRegression_1f95b4862951', name='rawPredictionCol', doc='raw prediction (a.k.a. confidence) column name'): 'rawPrediction',
 Param(parent='LogisticRegression_1f95b4862951', name='regParam', doc='regularization parameter (>= 0)'): 0.01,
 Param(parent='LogisticRegression_1f95b4862951', name='standardization', doc='whether to standardize the training features before fitting the model'): True,
 Param(parent='LogisticRegression_1f95b4862951', name='threshold', doc='threshold in binary classification prediction, in range [0, 1]'): 0.5,
 Param(parent='LogisticRegression_1f95b4862951', name='tol', doc='the convergence tolerance for iterative algorithms (>= 0)'): 1e-06}

# We may alternatively specify parameters using a Python dictionary as a paramMap

paramMap = {lr.maxIter: 20}

paramMap[lr.maxIter] = 30 # Specify 1 Param, overwriting the original maxIter.

paramMap.update({lr.regParam: 0.1, lr.threshold: 0.55}) # Specify multiple Params.

# You can combine paramMaps, which are Python dictionaries.

paramMap2 = {lr.probabilityCol: "myProbability"} # Change output column name

paramMapCombined = paramMap.copy()

paramMapCombined.update(paramMap2)

# Now learn a new model using the paramMapCombined parameters.

# paramMapCombined overrides all parameters set earlier via lr.set* methods.

model2 = lr.fit(training, paramMapCombined)

print("Model 2 was fit using parameters: ")

print(model2.extractParamMap())

Model 2 was fit using parameters:

{Param(parent='LogisticRegression_1f95b4862951', name='aggregationDepth', doc='suggested depth for treeAggregate (>= 2)'): 2,
 Param(parent='LogisticRegression_1f95b4862951', name='elasticNetParam', doc='the ElasticNet mixing parameter, in range [0, 1]. For alpha = 0, the penalty is an L2 penalty. For alpha = 1, it is an L1 penalty'): 0.0,
 Param(parent='LogisticRegression_1f95b4862951', name='family', doc='The name of family which is a description of the label distribution to be used in the model. Supported options: auto, binomial, multinomial.'): 'auto',
 Param(parent='LogisticRegression_1f95b4862951', name='featuresCol', doc='features column name'): 'features',
 Param(parent='LogisticRegression_1f95b4862951', name='fitIntercept', doc='whether to fit an intercept term'): True,
 Param(parent='LogisticRegression_1f95b4862951', name='labelCol', doc='label column name'): 'label',
 Param(parent='LogisticRegression_1f95b4862951', name='maxIter', doc='maximum number of iterations (>= 0)'): 30,
 Param(parent='LogisticRegression_1f95b4862951', name='predictionCol', doc='prediction column name'): 'prediction',
 Param(parent='LogisticRegression_1f95b4862951', name='probabilityCol', doc='Column name for predicted class conditional probabilities. Note: Not all models output well-calibrated probability estimates! These probabilities should be treated as confidences, not precise probabilities'): 'myProbability',
 Param(parent='LogisticRegression_1f95b4862951', name='rawPredictionCol', doc='raw prediction (a.k.a. confidence) column name'): 'rawPrediction',
 Param(parent='LogisticRegression_1f95b4862951', name='regParam', doc='regularization parameter (>= 0)'): 0.1,
 Param(parent='LogisticRegression_1f95b4862951', name='standardization', doc='whether to standardize the training features before fitting the model'): True,
 Param(parent='LogisticRegression_1f95b4862951', name='threshold', doc='threshold in binary classification prediction, in range [0, 1]'): 0.55,
 Param(parent='LogisticRegression_1f95b4862951', name='tol', doc='the convergence tolerance for iterative algorithms (>= 0)'): 1e-06}
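The two printed maps show a precedence rule. A default is overridden by a value set on the estimator, and that value is overridden in turn by the extra paramMap passed to fit(). The plain-Python sketch below shows that merge order. It simplifies the real mechanism by using parameter names as string keys instead of pyspark Param objects, and by keeping only a subset of the defaults.

```python
# Defaults (subset), as listed by lr.explainParams() above.
defaults = {"maxIter": 100, "regParam": 0.0, "threshold": 0.5,
            "probabilityCol": "probability"}
# Values set when constructing LogisticRegression(maxIter=10, regParam=0.01).
explicitly_set = {"maxIter": 10, "regParam": 0.01}

# Extra map passed to lr.fit(training, paramMapCombined), built as in the notebook.
param_map = {"maxIter": 20}
param_map["maxIter"] = 30                                # later assignment wins
param_map.update({"regParam": 0.1, "threshold": 0.55})
param_map_combined = {**param_map, "probabilityCol": "myProbability"}

def effective_params(defaults, explicitly_set, extra=None):
    """Merge left to right: later dictionaries override earlier ones."""
    return {**defaults, **explicitly_set, **(extra or {})}

print(effective_params(defaults, explicitly_set))
# {'maxIter': 10, 'regParam': 0.01, 'threshold': 0.5, 'probabilityCol': 'probability'}
print(effective_params(defaults, explicitly_set, param_map_combined))
# {'maxIter': 30, 'regParam': 0.1, 'threshold': 0.55, 'probabilityCol': 'myProbability'}
```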

# Make predictions on test data using the Transformer.transform() method.

# LogisticRegression.transform will only use the 'features' column.

# Note that model2.transform() outputs a "myProbability" column instead of the usual

# 'probability' column since we renamed the lr.probabilityCol parameter previously.

prediction = model2.transform(test)
result = prediction.select("features", "label", "myProbability", "prediction") \
    .collect()
for row in result:
    print("features=%s, label=%s -> prob=%s, prediction=%s"
          % (row.features, row.label, row.myProbability, row.prediction))

features=[-1.0,1.5,1.3], label=1.0 -> prob=[0.057073041710340604,0.9429269582896593], prediction=1.0
features=[3.0,2.0,-0.1], label=0.0 -> prob=[0.9238522311704118,0.07614776882958824], prediction=0.0
features=[0.0,2.2,-1.5], label=1.0 -> prob=[0.10972776114779727,0.8902722388522027], prediction=1.0
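For binary logistic regression, the prediction column comes from the probability of class 1 and the threshold parameter, which is 0.55 for model2. The plain-Python sketch below applies that last step to the three probability vectors printed above. It assumes the usual rule: predict class 1 when P(class 1) > threshold. The margin helper inverts the logistic function p = 1 / (1 + exp(-m)). It is included only to show that a threshold t on the probability is the same as a threshold log(t / (1 - t)) on the margin. Both helper names are our own.

```python
import math

def predict(p1, threshold):
    """Predict class 1.0 when P(class 1) exceeds the threshold, else 0.0."""
    return 1.0 if p1 > threshold else 0.0

def margin(p1):
    """Invert the logistic function p1 = 1 / (1 + exp(-m))."""
    return math.log(p1 / (1.0 - p1))

# Second entry of each "prob" vector printed above (P(class 1) under model2).
p1_values = [0.9429269582896593, 0.07614776882958824, 0.8902722388522027]
threshold = 0.55

print([predict(p, threshold) for p in p1_values])  # [1.0, 0.0, 1.0]

# Same decisions by comparing margins with the logit of the threshold.
cutoff = math.log(threshold / (1.0 - threshold))
print([1.0 if margin(p) > cutoff else 0.0 for p in p1_values])  # [1.0, 0.0, 1.0]
```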

Pipeline example: Decision tree classifier

from pyspark.ml import Pipeline

from pyspark.ml.classification import DecisionTreeClassifier

from pyspark.ml.feature import StringIndexer, VectorIndexer

from pyspark.ml.evaluation import MulticlassClassificationEvaluator

# Load the data stored in LIBSVM format as a DataFrame.

data = spark.read.format("libsvm").load("/data/sample_libsvm_data.txt")

# Index labels, adding metadata to the label column.

# Fit on whole dataset to include all labels in index.

labelIndexer = StringIndexer(inputCol="label", outputCol="indexedLabel").fit(data)

# Automatically identify categorical features, and index them.

# We specify maxCategories so features with > 4 distinct values are treated as continuous.

featureIndexer = \
    VectorIndexer(inputCol="features", outputCol="indexedFeatures", maxCategories=4).fit(data)

# Split the data into training and test sets (30% held out for testing)

(trainingData, testData) = data.randomSplit([0.7, 0.3])

# Train a DecisionTree model.

dt = DecisionTreeClassifier(labelCol="indexedLabel", featuresCol="indexedFeatures")

# Chain indexers and tree in a Pipeline

pipeline = Pipeline(stages=[labelIndexer, featureIndexer, dt])

# Train model. This also runs the indexers.

model = pipeline.fit(trainingData)

# Make predictions.

predictions = model.transform(testData)

# Select example rows to display.

predictions.select("prediction", "indexedLabel", "features").show(5)

# Select (prediction, true label) and compute test error

evaluator = MulticlassClassificationEvaluator(

labelCol="indexedLabel", predictionCol="prediction", metricName="accuracy")

accuracy = evaluator.evaluate(predictions)

print("Test Error = %g " % (1.0 - accuracy))

treeModel = model.stages[2]

# summary only

print(treeModel)

+----------+------------+--------------------+
|prediction|indexedLabel|            features|
+----------+------------+--------------------+
|       1.0|         1.0|(692,[100,101,102...|
|       1.0|         1.0|(692,[122,123,124...|
|       1.0|         1.0|(692,[122,123,148...|
|       1.0|         1.0|(692,[123,124,125...|
|       1.0|         1.0|(692,[124,125,126...|
+----------+------------+--------------------+
only showing top 5 rows

Test Error = 0.0285714
DecisionTreeClassificationModel (uid=DecisionTreeClassifier_19583263959e) of depth 1 with 3 nodes
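The two indexers in this pipeline follow simple rules that can be sketched without Spark. By default, StringIndexer orders labels by descending frequency, so the most frequent label gets index 0.0. In recent Spark versions, ties are broken alphabetically. VectorIndexer treats a feature as categorical only when it has at most maxCategories distinct values, as the code comment says. The helpers below (string_index, categorical_features) are hypothetical plain-Python stand-ins, not the pyspark classes, and the toy data is made up.

```python
from collections import Counter

def string_index(labels):
    """Map each distinct label to an index: most frequent first, ties alphabetical."""
    counts = Counter(labels)
    ordered = sorted(counts, key=lambda s: (-counts[s], s))
    return {label: float(i) for i, label in enumerate(ordered)}

def categorical_features(rows, max_categories):
    """Indices of features with at most max_categories distinct values."""
    n_features = len(rows[0])
    return [j for j in range(n_features)
            if len({row[j] for row in rows}) <= max_categories]

# Made-up labels; numeric labels are indexed as strings, e.g. "1.0".
labels = ["1.0", "0.0", "1.0", "1.0", "0.0"]
print(string_index(labels))  # {'1.0': 0.0, '0.0': 1.0}

# Made-up features: feature 0 has 2 distinct values, feature 1 has 5.
rows = [[0.0, 0.3], [1.0, 1.7], [0.0, 2.2], [1.0, 4.8], [0.0, 5.1]]
print(categorical_features(rows, max_categories=4))  # [0]
```

With maxCategories=4, feature 0 would be indexed as categorical and feature 1 left continuous.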

Pipeline example: Clustering

from pyspark.ml.clustering import KMeans

from pyspark.ml.evaluation import ClusteringEvaluator

# Loads data.

dataset = spark.read.format("libsvm").load("/data/sample_kmeans_data.txt")

# Trains a k-means model.

kmeans = KMeans().setK(2).setSeed(1)

model = kmeans.fit(dataset)

# Make predictions

predictions = model.transform(dataset)

# Evaluate clustering by computing Silhouette score

evaluator = ClusteringEvaluator()

silhouette = evaluator.evaluate(predictions)

print("Silhouette with squared euclidean distance = " + str(silhouette))

Silhouette with squared euclidean distance = 0.9997530305375207

# Shows the result.

centers = model.clusterCenters()

print("Cluster Centers: ")

for center in centers:
    print(center)

Cluster Centers:
[0.1 0.1 0.1]
[9.1 9.1 9.1]
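Below is a plain-Python sketch of what the KMeans and ClusteringEvaluator cells compute. It runs Lloyd's iterations: assign each point to its nearest center, then move each center to the mean of its points. It then computes the silhouette score with squared Euclidean distance. Two assumptions apply. First, the six points below are our assumption about the contents of sample_kmeans_data.txt, chosen to be consistent with the centers printed above. Second, the sketch starts from the first k points, whereas Spark's KMeans uses k-means|| initialization by default.

```python
def sq_dist(a, b):
    """Squared Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def mean_point(points):
    return [sum(coord) / len(points) for coord in zip(*points)]

def kmeans(points, k, max_iter=20):
    """Lloyd's algorithm, initialised (for simplicity) with the first k points."""
    centers = [list(p) for p in points[:k]]
    for _ in range(max_iter):
        labels = [min(range(k), key=lambda c: sq_dist(p, centers[c])) for p in points]
        new_centers = []
        for c in range(k):
            members = [p for p, l in zip(points, labels) if l == c]
            new_centers.append(mean_point(members) if members else centers[c])
        if new_centers == centers:  # converged: assignments no longer change
            break
        centers = new_centers
    return centers, labels

def silhouette(points, labels):
    """Mean silhouette (b - a) / max(a, b) using squared Euclidean distance."""
    clusters = sorted(set(labels))
    scores = []
    for i, p in enumerate(points):
        own = labels[i]
        same = [sq_dist(p, q) for j, q in enumerate(points) if labels[j] == own and j != i]
        if not same:  # singleton cluster: score 0 by convention
            scores.append(0.0)
            continue
        a = sum(same) / len(same)  # mean distance within own cluster
        b = min(  # smallest mean distance to another cluster
            sum(sq_dist(p, q) for j, q in enumerate(points) if labels[j] == c)
            / labels.count(c)
            for c in clusters if c != own
        )
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)

# Assumed contents of sample_kmeans_data.txt (see the lead-in).
points = [[0.0, 0.0, 0.0], [0.1, 0.1, 0.1], [0.2, 0.2, 0.2],
          [9.0, 9.0, 9.0], [9.1, 9.1, 9.1], [9.2, 9.2, 9.2]]
centers, labels = kmeans(points, k=2)
print([[round(x, 6) for x in c] for c in centers])  # [[0.1, 0.1, 0.1], [9.1, 9.1, 9.1]]
print(round(silhouette(points, labels), 6))         # 0.999753
```

Under these assumptions, the sketch reproduces the centers and, to six decimals, the silhouette reported by ClusteringEvaluator above.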

Model Selection with Cross-Validation

from pyspark.ml import Pipeline
from pyspark.ml.classification import LogisticRegression

from pyspark.ml.evaluation import BinaryClassificationEvaluator

from pyspark.ml.feature import HashingTF, Tokenizer

from pyspark.ml.tuning import CrossValidator, ParamGridBuilder

# Prepare training documents, which are labeled.

training = spark.createDataFrame([

(0, "a b c d e spark", 1.0),

(1, "b d", 0.0),

(2, "spark f g h", 1.0),

(3, "hadoop mapreduce", 0.0),

(4, "b spark who", 1.0),

(5, "g d a y", 0.0),

(6, "spark fly", 1.0),

(7, "was mapreduce", 0.0),

(8, "e spark program", 1.0),

(9, "a e c l", 0.0),

(10, "spark compile", 1.0),

(11, "hadoop software", 0.0)

], ["id", "text", "label"])

# Configure an ML pipeline, which consists of three stages: tokenizer, hashingTF, and lr.

tokenizer = Tokenizer(inputCol="text", outputCol="words")

hashingTF = HashingTF(inputCol=tokenizer.getOutputCol(), outputCol="features")

lr = LogisticRegression(maxIter=10)

pipeline = Pipeline(stages=[tokenizer, hashingTF, lr])

# We now treat the Pipeline as an Estimator, wrapping it in a CrossValidator instance.

# This will allow us to jointly choose parameters for all Pipeline stages.

# A CrossValidator requires an Estimator, a set of Estimator ParamMaps, and an Evaluator.

# We use a ParamGridBuilder to construct a grid of parameters to search over.

# With 3 values for hashingTF.numFeatures and 2 values for lr.regParam,

# this grid will have 3 x 2 = 6 parameter settings for CrossValidator to choose from.

paramGrid = ParamGridBuilder() \
    .addGrid(hashingTF.numFeatures, [10, 100, 1000]) \
    .addGrid(lr.regParam, [0.1, 0.01]) \
    .build()

crossval = CrossValidator(estimator=pipeline,

estimatorParamMaps=paramGrid,

evaluator=BinaryClassificationEvaluator(),

numFolds=2) # use 3+ folds in practice

# Run cross-validation, and choose the best set of parameters.

cvModel = crossval.fit(training)

# Prepare test documents, which are unlabeled.

test = spark.createDataFrame([

(4, "spark i j k"),

(5, "l m n"),

(6, "mapreduce spark"),

(7, "apache hadoop")

], ["id", "text"])

# Make predictions on test documents. cvModel uses the best model found (lrModel).

prediction = cvModel.transform(test)

selected = prediction.select("id", "text", "probability", "prediction")

for row in selected.collect():
    print(row)

Row(id=4, text='spark i j k', probability=DenseVector([0.2581, 0.7419]), prediction=1.0)
Row(id=5, text='l m n', probability=DenseVector([0.9186, 0.0814]), prediction=0.0)
Row(id=6, text='mapreduce spark', probability=DenseVector([0.432, 0.568]), prediction=1.0)
Row(id=7, text='apache hadoop', probability=DenseVector([0.6766, 0.3234]), prediction=0.0)
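The search that CrossValidator performs can be sketched in plain Python. The sketch builds every combination in the grid (3 x 2 = 6 in the example above). It scores each combination by its average metric over the validation folds, picks the best, and refits on the full data. Several pieces are hypothetical: the toy one-dimensional classifier, its shift and flip parameters, and the data. Folds are assigned round-robin so the result is reproducible, whereas Spark splits the data randomly.

```python
from itertools import product

def build_grid(grid):
    """All combinations of {param: [values]}, analogous to ParamGridBuilder().build()."""
    names = list(grid)
    return [dict(zip(names, combo)) for combo in product(*(grid[n] for n in names))]

def cross_validate(data, param_maps, fit, evaluate, num_folds):
    """Return (best params, model refit on all data, average metric per param map)."""
    folds = [data[i::num_folds] for i in range(num_folds)]  # round-robin folds
    avg_metrics = []
    for params in param_maps:
        scores = []
        for i, validation in enumerate(folds):
            train = [row for j, fold in enumerate(folds) if j != i for row in fold]
            scores.append(evaluate(fit(train, params), validation))
        avg_metrics.append(sum(scores) / num_folds)
    best = max(range(len(param_maps)), key=lambda i: avg_metrics[i])  # larger is better
    return param_maps[best], fit(data, param_maps[best]), avg_metrics

def fit(rows, params):
    """Hypothetical 1-D classifier: boundary halfway between class means, plus a shift."""
    xs0 = [x for x, y in rows if y == 0.0]
    xs1 = [x for x, y in rows if y == 1.0]
    boundary = (sum(xs0) / len(xs0) + sum(xs1) / len(xs1)) / 2 + params["shift"]
    def model(x):
        pred = 1.0 if x > boundary else 0.0
        return 1.0 - pred if params["flip"] else pred  # "flip" inverts predictions
    return model

def accuracy(model, rows):
    return sum(model(x) == y for x, y in rows) / len(rows)

data = [(0.1, 0.0), (0.2, 0.0), (0.3, 0.0), (0.4, 0.0),
        (0.6, 1.0), (0.7, 1.0), (0.8, 1.0), (0.9, 1.0)]
grid = build_grid({"shift": [-0.3, 0.0, 0.3], "flip": [False, True]})
print(len(grid))  # 6

best_params, best_model, metrics = cross_validate(data, grid, fit, accuracy, num_folds=2)
print(best_params)  # {'shift': 0.0, 'flip': False}
```

As in the Spark example, the returned model is refit on all the data with the winning parameters. That refit model is what cvModel.transform() uses.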

Lab

- Run a logistic regression with airdelay data.